Chapter 1: The history of early computing technology

1.3 Electronic devices

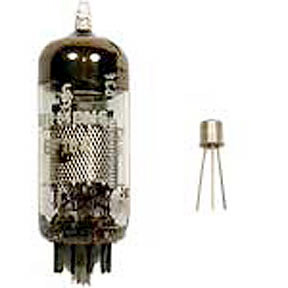

The turn of the century saw a large number of contributions to electronics. One of the most significant was the triode vacuum tube, invented by Lee de Forest in 1906. It was an improvement on the Fleming tube, or Fleming valve, introduced by John Ambrose Fleming two years earlier. De Forest’s tube contains three components: the anode, the cathode and a control grid. The grid controls the flow of electrons between the cathode and the anode, so the tube can act as a switch or an amplifier. A PBS special on the history of the computer used monkeys (the cathode) throwing pebbles (the electrons) through a gate (the grid) at a target (the anode) to explain the operation of the triode tube.

Movie 1.1 Simulation of a vacuum tube

with host Ira Flatow, from PBS “Transistorized!”

http://www.pbs.org/transistor/science/events/vacuumt.html

http://www.pbs.org/transistor/album1/addlbios/deforest.html

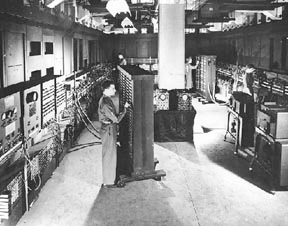

Engineers valued the functionality of the vacuum tube, but were intent on finding an alternative. Much like a light bulb, the vacuum tube generated a lot of heat and tended to burn out quickly. It required a lot of electricity, and it was slow and bulky, requiring fairly large enclosures. For example, the ENIAC, an early electronic digital computer, weighed about thirty tons, consumed roughly 150 kilowatts of electrical power, and contained nearly 18,000 vacuum tubes. The tubes ran hot and burned out frequently, making the machine unreliable.

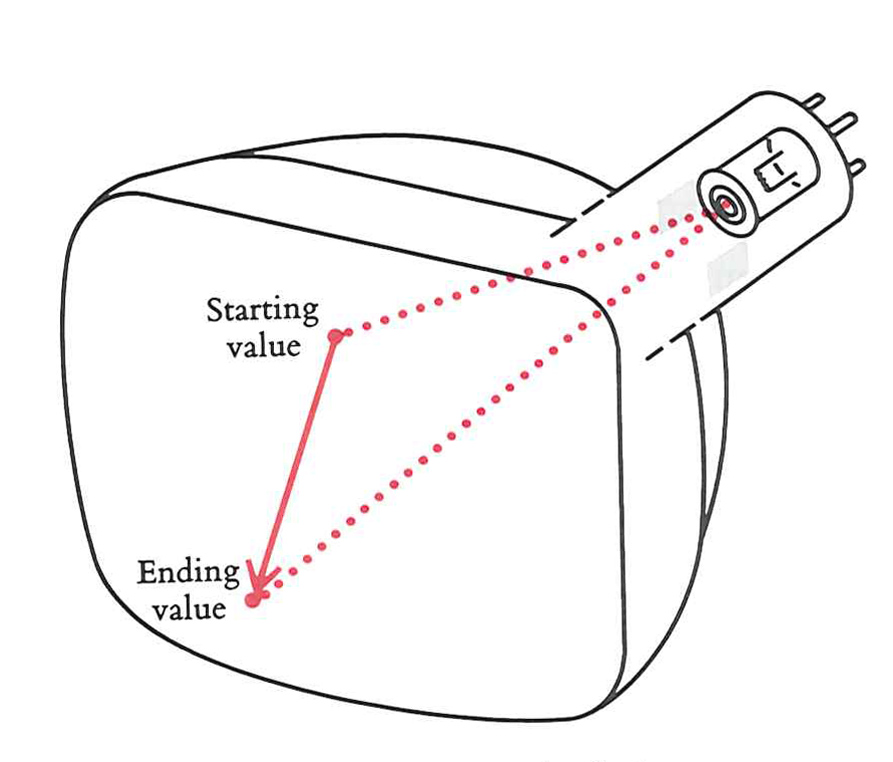

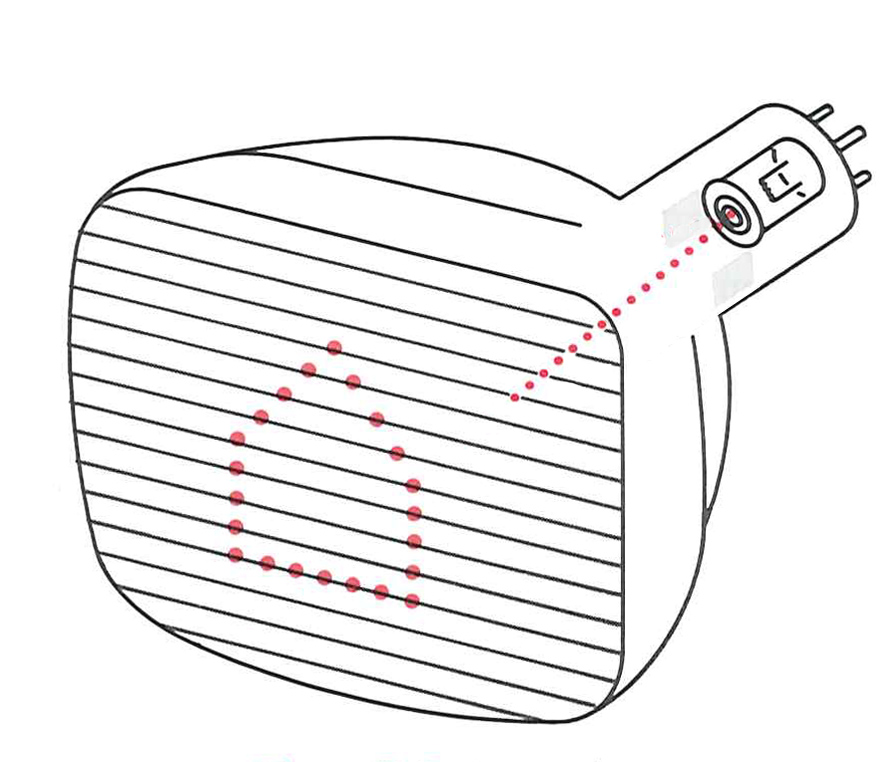

A special kind of vacuum tube, the Cathode Ray Tube (CRT), was invented in 1885. It produces images when an electron beam generated by the cathode strikes a phosphorescent anode surface. The practicality of this tube was shown in 1897, when German scientist Ferdinand Braun introduced a CRT with a fluorescent screen, known as the cathode ray oscilloscope. The screen emitted visible light when struck by a beam of electrons.

This invention led to modern television when Idaho native Philo Farnsworth introduced the image dissector in 1927 and showed the first 60-line “raster-scanned” image (a dollar sign). Farnsworth has been called one of the greatest inventors of all time, but an unfortunate set of circumstances left him in obscurity for a long period. RCA challenged the television patents that Farnsworth received in 1930. Although he won the litigation, it took so long that his patents were close to expiring by the time it ended, and RCA mounted a public relations campaign to promote one of its engineers as the actual inventor (see the sidebar below).

Variations of the CRT have been used throughout the history of computer graphics, and it was the graphics display device of choice until the LCD was introduced roughly 100 years later. The three main variations of the CRT are the vector display, the “storage tube” CRT (developed in 1949), and the raster display.

For more information: http://entertainment.howstuffworks.com/tv3.htm

The following text is from Time Magazine’s accounting of the 100 great inventors of all time:

“As it happens, [Vladimir] Zworykin had made a patent application in 1923, and by 1933 had developed a camera tube he called an Iconoscope. It also happens that Zworykin was by then connected with the Radio Corporation of America, whose chief, David Sarnoff, had no intention of paying royalties to [Philo] Farnsworth for the right to manufacture television sets. “RCA doesn’t pay royalties,” he is alleged to have said, “we collect them.”

And so there ensued a legal battle over who invented television. RCA’s lawyers contended that Zworykin’s 1923 patent had priority over any of Farnsworth’s patents, including the one for his Image Dissector. RCA’s case was not strong, since it could produce no evidence that in 1923 Zworykin had produced an operable television transmitter. Moreover, Farnsworth’s old [high school] teacher, [Justin] Tolman, not only testified that Farnsworth had conceived the idea when he was a high school student, but also produced the original sketch of an electronic tube that Farnsworth had drawn for him at that time. The sketch was almost an exact replica of an Image Dissector.

In 1934 the U.S. Patent Office rendered its decision, awarding priority of invention to Farnsworth. RCA appealed and lost, but litigation about various matters continued for many years until Sarnoff finally agreed to pay Farnsworth royalties.

But he didn’t have to for very long. During World War II, the government suspended sales of TV sets, and by the war’s end, Farnsworth’s key patents were close to expiring. When they did, RCA was quick to take charge of the production and sales of TV sets, and in a vigorous public-relations campaign, promoted both Zworykin and Sarnoff as the fathers of television.”

Ref: http://www.time.com/time/time100/scientist/profile/farnsworth.html

and

http://www.farnovision.com/chronicles/

The specter of World War II, and the need to calculate complex values such as weapons trajectories and firing tables for the Army, pushed the military to replace its error-prone mechanical computers. Lt. Herman Goldstine of the Aberdeen Proving Ground contracted with the University of Pennsylvania’s Moore School of Electrical Engineering to design a digital device. In 1943 Dr. John W. Mauchly and J. Presper Eckert, Jr. of the school were awarded a contract to develop the preliminary designs for this electronic computer. The ENIAC (Electronic Numerical Integrator and Computer) was placed in operation at the Moore School component by component, beginning in 1944. Final assembly took place during the fall of 1945, and the machine was formally announced in 1946.

The ENIAC was the prototype from which most other modern computers evolved. All of the major components and concepts of today’s digital computers were embedded in the design. ENIAC could distinguish the sign of a number, compare numbers, add, subtract, multiply, divide, and compute square roots. Its electronic accumulators combined the functions of an adding machine and a storage unit. No central memory unit existed, and storage was localized within the circuitry of the computer.

The primary aim of the designers was to achieve speed by making ENIAC as all-electronic as possible. The only mechanical elements in the final product were actually external to the calculator itself. These were an IBM card reader for input, a card punch for output, and the 1,500 associated relays.

As a side note, after the prototype was delivered to the military, Eckert and Mauchly formed a company to commercialize the computer. Disputes arose over who owned the patents for the design, and the two men were forced to resign from the University of Pennsylvania. The concept of “technology transfer” from university research labs to the private sector, which is common today, had no counterpart in the late 1940s, nor for decades afterward, even into the 1980s.

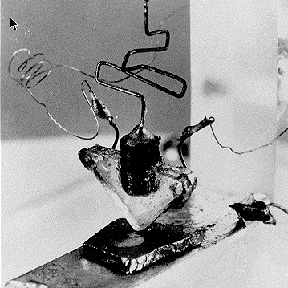

The revolution in electronics can be traced to the replacement of the tube by the transistor[1], invented in 1947 by a team at Bell Labs (Shockley, Bardeen and Brattain). Based on semiconductor technology, the transistor, like the vacuum tube, functioned as a switch or an amplifier. Unlike the tube, the transistor was small, ran at a stable, low temperature, and was fast and very reliable. Because of its small size and low heat, it could be arranged in large numbers in a small area, allowing the devices built from it to shrink significantly.

The transistor still had to be soldered into circuits by hand, however, so the achievable size was still limited. Its ubiquity resulted in all sorts of mid-sized devices, from radios to computers, that were introduced in the 1950s.

The ENIAC patent application (covering basic claims relating to the design of electronic digital computers) was filed in 1947 by John W. Mauchly and J. Presper Eckert, arising from the work conducted at the Moore School of Electrical Engineering at the University of Pennsylvania. In 1946, Eckert and Mauchly left the Moore School and formed their own commercial computer enterprise, the Electronic Control Company, which was later incorporated as the Eckert-Mauchly Computer Corporation. In 1950 Remington Rand acquired Eckert-Mauchly, and the rights to the ENIAC patent eventually passed to Sperry Rand as a result of the merger of the Sperry Corporation and Remington Rand in 1955. After the patent was granted to the Sperry Rand Corporation in 1964, the corporation demanded royalties from all major participants in the computer industry. Honeywell refused to cooperate, so Sperry Rand filed a patent infringement suit against Honeywell in 1967. Honeywell responded in the same year with an antitrust suit charging that the Sperry Rand-IBM cross-licensing agreement was a conspiracy to monopolize the computer industry, and also that the ENIAC patent was fraudulently procured and invalid.

Ref: Charles Babbage Institute, Honeywell vs. Sperry Litigation Records, 1947-1972; Also see a first-person accounting by Charles McTiernan in an Anecdote article in the Annals of the History of Computing

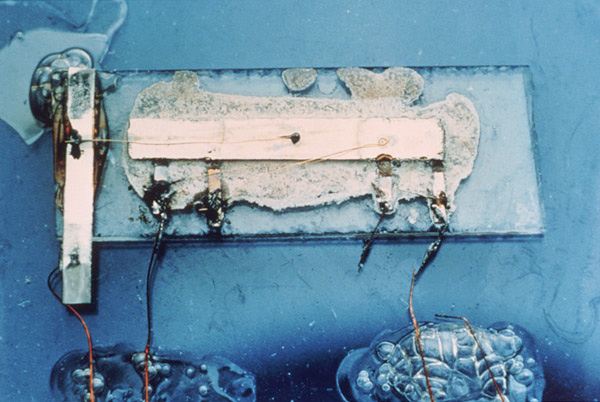

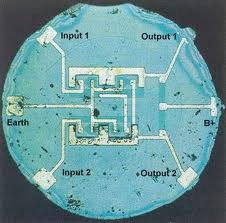

The next breakthrough, which is credited with spawning an entire industry of miniature electronics, came in 1958 with the invention (independently by two individuals) of the integrated circuit. The integrated circuit (IC, or chip) was invented by Jack St. Clair Kilby of Texas Instruments (later winner of the Nobel Prize in physics and one of the designers of the TI hand-held calculator) and Robert Noyce of Fairchild Semiconductor (later one of the founders of Intel). It allowed the entire circuit (transistors, capacitors, resistors, wires, …) to be made on a single piece of semiconductor material.

In the design of a circuit, connections are of primary importance, because the electrical current must be able to traverse the entire circuit. In early circuit construction, two problems contributed to failure. First, manual assembly of huge numbers of tiny components resulted in many faulty connections. The second problem was one of size: if the components are too large or the connections too long, electric signals cannot travel through the circuit fast enough.
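The size problem can be made concrete with a little arithmetic: the time a signal needs to cross a connection is the connection’s length divided by the signal’s speed. The sketch below is only an illustration under stated assumptions. The function name `propagation_delay_ns`, the velocity factor of 0.5 (signals in real wiring travel at some fraction of the speed of light, and the exact fraction depends on the materials), and the two path lengths are all hypothetical choices, not figures from the historical record.

```python
# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def propagation_delay_ns(path_length_m, velocity_factor=0.5):
    """Return the time, in nanoseconds, for a signal to cross a connection.

    velocity_factor is the ASSUMED signal speed as a fraction of the speed
    of light; 0.5 is an illustrative placeholder, not a measured value.
    """
    if not 0 < velocity_factor <= 1:
        raise ValueError("velocity_factor must be in the range (0, 1]")
    seconds = path_length_m / (velocity_factor * SPEED_OF_LIGHT_M_PER_S)
    return seconds * 1e9


if __name__ == "__main__":
    # Hypothetical path lengths chosen only to contrast the two scales.
    examples = [
        ("hand-wired connection, 1 m", 1.0),
        ("on-chip connection, 1 mm", 0.001),
    ]
    for label, length_m in examples:
        print(f"{label}: {propagation_delay_ns(length_m):.4f} ns")
```

Because the delay scales linearly with length, shrinking a connection by a factor of a thousand shrinks its delay by the same factor, which is one reason smaller circuits can be switched faster.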

In 1958 Kilby came up with his solution to the miniaturization problem: make all the components and the chip out of the same block (monolith) of semiconductor material. Making all the parts out of the same block of material and adding the metal needed to connect them as a layer on top of it eliminated the need for individual discrete components. Circuits could be made smaller and the manual assembly part of the manufacturing process could be eliminated.

Noyce’s solution, introduced six months later, solved several problems that Kilby’s circuit had, mainly the problem of connecting all the components on the monolith. His solution was to add the metal as a final layer, and then remove enough to provide the desired flow of electrons; thus the “wires” needed to connect the components were formed. This made the integrated circuit more suitable for mass production.

http://www.chiphistory.org/fairchild_ic.htm

The Encyclopedia of Computer Science identifies the Atanasoff–Berry Computer (ABC) as the first electronic digital computing device. Conceived in 1937, the machine was not programmable, being designed only to solve systems of linear equations. It was successfully tested in 1942. It was the first computing machine to combine vacuum tubes, binary numbers and capacitors. The capacitors were mounted in a rotating drum and held the electrical charge that served as the memory.

However, its intermediate result storage mechanism, a paper card writer/reader, was unreliable, and when inventor John Vincent Atanasoff left Iowa State College for World War II assignments, work on the machine was discontinued. The ABC pioneered important elements of modern computing, including binary arithmetic and electronic switching elements, but its special-purpose nature and lack of a changeable, stored program distinguish it from modern computers. The computer was designated an IEEE Milestone in 1990.

Atanasoff and Clifford Berry’s computer work was not widely known until it was rediscovered in the 1960s, amidst conflicting claims about the first instance of an electronic computer. At that time, the ENIAC was considered to be the first computer in the modern sense, but in 1973 a U.S. District Court invalidated the ENIAC patent and concluded that the ENIAC inventors had derived the subject matter of the electronic digital computer from Atanasoff. The judge stated, “Eckert and Mauchly did not themselves first invent the automatic electronic digital computer, but instead derived that subject matter from one Dr. John Vincent Atanasoff.”