Chapter 17: Virtual Environments

17.4 Interaction

One of the first instrumented gloves described in the literature was the Sayre Glove, developed by Tom DeFanti and Daniel Sandin in a 1977 project for the National Endowment for the Arts. (In 1962 Uttal from IBM patented a glove for teaching touch typing, but it was not general purpose enough to be used in VR applications.) The Sayre Glove used light-based sensors: flexible tubes with a light source at one end and a photocell at the other. As the fingers were bent, the amount of light that hit the photocells varied, thus providing a measure of finger flexion. The glove, based on an idea by colleague Rich Sayre, was inexpensive and lightweight, and could monitor hand movements by measuring the flexion of the metacarpophalangeal joints of the hand. It provided an effective method for multidimensional control, such as mimicking a set of sliders.

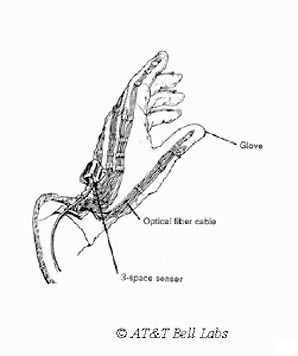

The first widely recognized device for measuring hand positions was developed by Dr. Gary Grimes at Bell Labs. Patented in 1983, Grimes’ Digital Data Entry Glove had finger flex sensors, tactile sensors at the fingertips, orientation sensing and wrist-positioning sensors. The positions of the sensors themselves were changeable. It was intended for creating “alpha-numeric” characters by examining hand positions. It was primarily designed as an alternative to keyboards, but it also proved effective as a tool that allowed non-vocal users to “finger-spell” words.

This was soon followed by an optical glove, which was later to become the VPL DataGlove. This glove was built by Thomas Zimmerman, who also patented the optical flex sensors used by the gloves. Like the Sayre Glove, these sensors had fiber optic cables with a light at one end and a photodiode at the other. Zimmerman had also built a simplified version, called the Z-glove, which he had attached to his Commodore 64. This device measured the angles of each of the first two knuckles of the fingers using the fiber optic devices, and was usually combined with a Polhemus tracking device. Some also had abduction measurements. This was really the first commercially available glove; however, at about $9000 it was prohibitively expensive.

The DataGlove (originally developed by VPL Research) was a neoprene fabric glove with two fiber optic loops on each finger. Each loop was dedicated to one knuckle, which occasionally caused a problem: if a user had unusually large or small hands, the loops would not correspond very well to the actual knuckle positions, and the user was not able to produce very accurate gestures. At one end of each loop was an LED and at the other end a photosensor. The fiber optic cable had small cuts along its length. When the user bent a finger, light escaped from the fiber optic cable through these cuts. The amount of light reaching the photosensor was measured and converted into a measure of how much the finger was bent. The DataGlove required recalibration for each user, and often for the same user over a session’s duration. This, coupled with user fatigue caused by the glove’s stiffness, kept it from reaching the market penetration that was anticipated.
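The per-user recalibration described above can be sketched as a two-point mapping from raw photosensor readings to a bend angle. This is a minimal illustration only: the function names, the raw reading values, and the assumption that the reading varies linearly with bend are hypothetical, not details of the VPL hardware.

```python
def make_flex_calibration(raw_flat, raw_fist, max_angle_deg=90.0):
    """Return a function mapping a raw photosensor reading to a bend angle.

    raw_flat: reading with the finger held straight (most light arrives).
    raw_fist: reading with the finger fully bent (least light arrives).
    Assumes (hypothetically) a linear response between the two poses.
    """
    span = raw_flat - raw_fist
    if span == 0:
        raise ValueError("calibration poses produced identical readings")

    def to_angle(raw):
        # Fraction of the way from the straight pose to the bent pose.
        fraction = (raw_flat - raw) / span
        # Clamp so readings outside the calibrated range stay in [0, max].
        fraction = min(1.0, max(0.0, fraction))
        return fraction * max_angle_deg

    return to_angle


# Hypothetical calibration readings for two users with different hand sizes.
user_a = make_flex_calibration(raw_flat=900, raw_fist=300)
user_b = make_flex_calibration(raw_flat=850, raw_fist=500)

# The same raw reading corresponds to a different bend for each user.
print(user_a(600))
print(user_b(600))
```

With these made-up numbers the same raw reading of 600 maps to a noticeably larger bend for the second user than for the first, which is why a calibration recorded for one hand cannot simply be reused for another.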

Again from the National Academy of Sciences report:

The basic technologies developed through VR research have been applied in a variety of ways over the last several decades. One line of work led to applications of VR in biochemistry and medicine. This work began in the 1960s at the University of North Carolina (UNC) at Chapel Hill. The effort was launched by Frederick Brooks, who was inspired by Sutherland’s vision of the ultimate display as enabling a user to see, hear, and feel in the virtual world. Flight simulators had incorporated sound and haptic feedback for some time. Brooks selected molecular graphics as the principal driving problem of his program. The goal of Project GROPE, started by Brooks in 1967, was to develop a haptic interface for molecular forces. The idea was that, if the force constraints on particular molecular combinations could be “felt,” then the designer of molecules could more quickly identify combinations of structures that could dock with one another.

GROPE-I was a 2D system for continuous force fields. GROPE II was expanded to a full six-dimensional (6D) system with three forces and three torques. The computer available for GROPE II in 1976 could produce forces in real time only for very simple world models — a table top; seven child’s blocks; and the tongs of the Argonne Remote Manipulator (ARM), a large mechanical device. For real-time evaluation of molecular forces, Brooks and his team estimated that 100 times more computing power would be necessary. After building and testing the GROPE II system, the ARM was mothballed and the project was put on hold for about a decade until 1986, when VAX computers became available. GROPE III, completed in 1988, was a full 6D system. Brooks and his students then went on to build a full-molecular-force-field evaluator and, with 12 experienced biochemists, tested it in GROPE IIIB experiments in 1990. In these experiments, the users changed the structure of a drug molecule to get the best fit to an active site by manipulating up to 12 twistable bonds.

The test results on haptic visualization were extremely promising. The subjects saw the haptic display as a fast way to test many hypotheses in a short time and set up and guide batch computations. The greatest promise of the technique, however, was not in saving time but in improving situational awareness. Chemists using the method reported better comprehension of the force fields in the active site and of exactly why each particular candidate drug docked well or poorly. Based on this improved grasp of the problem, users could form new hypotheses and ideas for new candidate drugs.

The docking station is only one of the projects pursued by Brooks’s group at the UNC Graphics Laboratory. The virtual world envisioned by Sutherland would enable scientists or engineers to become immersed in the world rather than simply view a mathematical abstraction through a window from outside. The UNC group has pursued this idea through the development of what Brooks calls “intelligence-amplifying systems.” Virtual worlds are a subclass of intelligence-amplifying systems, which are expert systems that tie the mind in with the computer, rather than simply substitute a computer for a human.

In 1970, Brooks’s laboratory was designated as an NIH Research Resource in Molecular Graphics, with the goal of developing virtual worlds of technology to help biochemists and molecular biologists visualize and understand their data and models. During the 1990s, UNC has collaborated with industry sponsors such as HP to develop new architectures incorporating 3D graphics and volume-rendering capabilities into desktop computers (HP later decided not to commercialize the technology).

Since 1985, NSF funding has enabled UNC to pursue the Pixel-Planes project, with the goal of constructing an image-generation system capable of rendering 1.8 million polygons per second and a head-mounted display system with a lagtime under 50 milliseconds. This project is connected with GROPE and a large software project for mathematical modeling of molecules, human anatomy, and architecture. It is also linked to VISTANET, in which UNC and several collaborators are testing high-speed network technology for joining a radiologist who is planning cancer therapy with a virtual world system in his clinic, a Cray supercomputer at the North Carolina Supercomputer Center, and the Pixel-Planes graphics engine in Brooks’s laboratory.

With Pixel-Planes and the new generation of head-mounted displays, the UNC group has constructed a prototype system that enables the notions explored in GROPE to be transformed into a wearable virtual-world workstation. For example, instead of viewing a drug molecule through a window on a large screen, the chemist wearing a head-mounted display sits at a computer workstation with the molecule suspended in front of him in space. The chemist can pick it up, examine it from all sides, even zoom into remote interior dimensions of the molecule. Instead of an ARM gripper, the chemist wears a force-feedback exoskeleton that enables the right hand to “feel” the spring forces of the molecule being warped and shaped by the left hand.

In a similar use of this technology, a surgeon can work on a simulation of a delicate procedure to be performed remotely. A variation on and modification of the approach taken in the GROPE project is being pursued by UNC medical researcher James Chung, who is designing virtual-world interfaces for radiology. One approach is data fusion, in which a physician wearing a head-mounted display in an examination room could, for example, view a fetus by ultrasound imaging superimposed and projected in 3D by a workstation. The physician would see these data fused with the body of the patient. In related experiments with MRI and CT scan data fusion, a surgeon has been able to plan localized radiation treatment of a tumor.

Movie 17.2 UNC Fetal Surgery VR

The term haptic refers to our sense of touch. It consists of input via mechanoreceptors in the skin (neurons that convey information about texture) and the sense of proprioception, which interprets information about the size, weight and shape of objects via feedback from muscles and tendons in the hands and other limbs. Haptic feedback refers to the way we attempt to simulate this haptic sense in a virtual environment, by assigning physical properties to the virtual objects we encounter and designing devices to relay these properties back to the user. Haptic feedback devices use vibrators, air bladders, heat/cold materials, and titanium-nickel alloy transducers, which provide a minimal sense of touch.

UNC uses a ceiling-mounted Argonne Remote Manipulator (ARM) to test receptor sites for a drug molecule. The researcher, in virtual reality, grasps the drug molecule and holds it up to potential receptor sites. Good receptor sites attract the drug, while poor ones repel it. Using a force feedback system, scientists can easily feel where the drug can and should go.

http://www.cs.unc.edu/Research/

In 1979, F.H. Raab and others described the technology behind what has been one of the most widely utilized tracking systems in the VR world, the Polhemus. This six-degrees-of-freedom electromagnetic position tracking system was based on the application of orthogonal electromagnetic fields. Two varieties of electromagnetic position trackers were implemented: one used alternating current (AC) to generate the magnetic field, and the other used direct current (DC).

In the Polhemus AC system, three mutually perpendicular emitter coils sequentially generated AC magnetic fields that induced currents in the receiving sensor, which consisted of three passive mutually perpendicular coils. Because each of the three emitter coils induced a current in each of the three sensor coils, nine measurements were available. Sensor location and orientation were computed from these nine induced currents by calculating the small changes in the sensed coordinates and then updating the previous measurements.
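The nine measurements can be arranged as a 3x3 matrix, with one column per emitter coil and one row per sensor coil. As a hedged illustration of how position information is carried by those nine values, the sketch below models each emitter coil as an ideal magnetic dipole and recovers only the emitter-to-sensor distance. The dipole model, the constant `k`, and all function names are assumptions made for this example; it is not the published Polhemus algorithm, which also solves for direction and orientation. Under the dipole model, the sum of the squares of the nine signals equals 6k²/r⁶ no matter how the sensor is rotated.

```python
import math


def dipole_signal_matrix(position, k=1.0):
    """Nine coupling signals for a sensor aligned with the emitter axes.

    Ideal dipole model: S = (k / r**3) * (3 * u * u^T - I), where u is the
    unit vector from emitter to sensor. Column j holds the three sensor-coil
    signals produced while emitter coil j is active.
    """
    r = math.sqrt(sum(c * c for c in position))
    u = [c / r for c in position]
    scale = k / r ** 3
    return [
        [scale * (3.0 * u[i] * u[j] - (1.0 if i == j else 0.0))
         for j in range(3)]
        for i in range(3)
    ]


def rotate(matrix, rotation):
    """Apply a sensor rotation (3x3) to the signals: rotation @ matrix."""
    return [
        [sum(rotation[i][m] * matrix[m][j] for m in range(3))
         for j in range(3)]
        for i in range(3)
    ]


def estimate_range(signals, k=1.0):
    """Distance from total signal power: sum of squares = 6 * k**2 / r**6."""
    power = sum(v * v for row in signals for v in row)
    return (6.0 * k * k / power) ** (1.0 / 6.0)


# Sensor 0.5 units from the emitter, then turned 90 degrees about the z axis.
signals = dipole_signal_matrix([0.3, 0.4, 0.0])
turned = rotate(signals, [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])

print(estimate_range(signals))
print(estimate_range(turned))
```

Both estimates come out at approximately 0.5, the true distance, because rotating the sensor redistributes signal among the nine entries without changing their total power. The remaining information in the matrix is what a full tracker would use to recover direction and orientation.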

In 1964 Bill Polhemus started Polhemus Associates in Ann Arbor, Michigan. It was a 12-person engineering studies company working on navigation-related projects for the U.S. Department of Transportation and for similar European and Canadian departments. His research was focused on determining an object’s position and orientation in three-dimensional space.

He relocated the company to Malletts Bay in 1969, where it went beyond studies and began focusing on hardware. In late 1970, after an influx of what Polhemus called “a very clever team from a division of Northrop Corp. (now Northrop Grumman Corp.) that had a lot of experience in development of miniaturized inertial and magnetic devices,” the firm changed its name to Polhemus Navigation Sciences, later shortened to Polhemus, and incorporated in Vermont.

“The Polhemus system is used to track the orientation of the pilot’s helmet,” Polhemus said of the electromagnetic technology he pioneered. “The ultimate objective is to optically project an image on the visor of the pilot’s helmet so he can look anywhere and have the display that he needs. … It’s critical to know, in a situation like that, where the pilot’s helmet is pointed, so you know what kind of a display to put up on the visor,” he added before comparing the system to a “heads-up display” or “gun sight,” which projects similar data onto an aircraft’s windshield.

Polhemus was supported for a few years in the early 1970s by Air Force contracts. But by late 1973, “in the absence of any equity capital to speak of, we just ran dry,” in his words. “By that time, however, the device looked attractive to a number of companies, and there were several bids for it. We finally wound up selling to the Austin Company,” a large conglomerate with headquarters in Cleveland, Ohio.

The next few years saw the company change hands to McDonnell Douglas Corp. of St. Louis, Mo., and then to Kaiser Aerospace and Electronics Corp. of Foster City, Calif., in 1988.

Ernie Blood was an engineer and Jack Scully a salesman at Polhemus. Blood and Scully created the digitizer used for George Lucas’ groundbreaking Star Wars series, which won an Academy Award for Polhemus (Blood’s name was on the patent). They had been discussing possible expanded commercial uses for the Polhemus motion tracking technology, possibly in the entertainment field, in training situations, or in the medical field. However, Polhemus was focused on military applications and was not interested in any other markets. When they took the idea of a spinoff company to their superiors at McDonnell Douglas, the parent company of Polhemus, they were fired in 1986.

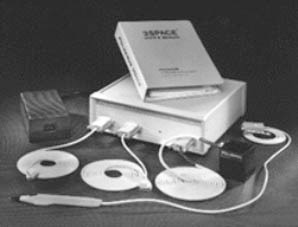

Still convinced that there were commercial possibilities, Blood and Scully started a new company in 1986, which they called Ascension. The first few years were lean years, but Blood improved upon the head-tracking technology for fighter pilots and Scully eventually negotiated a licensing agreement with GEC (General Electric Co. of Great Britain). The contract was put on hold for two years when Polhemus, which had been purchased by Kaiser Aerospace, a direct competitor of GEC, sued Ascension for patent infringement. Polhemus dropped the case shortly before it went to trial, and Ascension, with the financial backing of GEC, was able to stay afloat. The licensing agreement was finalized with GEC, and Ascension sales of equipment based on the technology took off, particularly in the medical field.

When the virtual reality revolution erupted in the early 1990s, Ascension played a part in it, developing motion trackers that could be used in high-priced games. “We decided from the beginning that we were not going to go after a single-segment application,” Blood said. “From day one, we’ve always made sure we were involved in a lot of different markets.” As the VR market declined, this philosophy helped Ascension’s sales stay constant.

A constant for Ascension was its work in the field of animation. Scully says “Ascension deserves some of the credit for inventing real-time animation, in which sensors capture the motions of performers for the instant animation of computerized characters.” Ascension’s Flock of Birds product has been used to capture this motion and define the animated characters. It has been used in the animation of characters in hundreds of television shows (MTV’s CyberCindy, Donkey Kong), commercials (the Pillsbury Doughboy, the Keebler elves), video games (Legend, College Hoops Basketball, SONY’s The Getaway) and movies (Starship Warriors, and pre-animation for Star Wars).

Ascension Technology served six markets: animation, medical imaging, biomechanics, virtual reality, simulation/training and military targeting systems from its facility. Using DC magnetic, AC magnetic, infrared-optical, inertial and laser technologies, Ascension provided turnkey motion capture systems for animated entertainment as well as custom tracking solutions for original equipment manufacturers to integrate into their products.

D.J. Sturman and D. Zeltzer, A survey of glove-based input, IEEE Computer Graphics and Applications, Vol. 14, No. 1, 1994, pp. 30-39.

F. Raab, E. Blood, T. Steiner, and H. Jones, Magnetic position and orientation tracking system, IEEE Transactions on Aerospace and Electronic Systems, Vol. 15, No. 5, 1979, pp. 709-718.

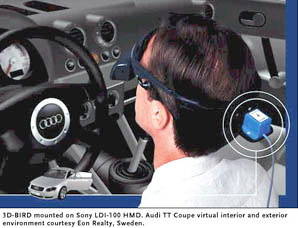

- 3D Bird mounted on the back of a Sony LDI-100 HMD in Audi TT coupe

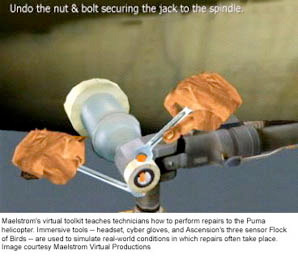

- Maelstrom’s virtual toolkit teaches technicians how to perform repairs on the Puma helicopter, using Ascension Flock of Birds