Chapter 19: Quest for Visual Realism

19.5 Global Illumination

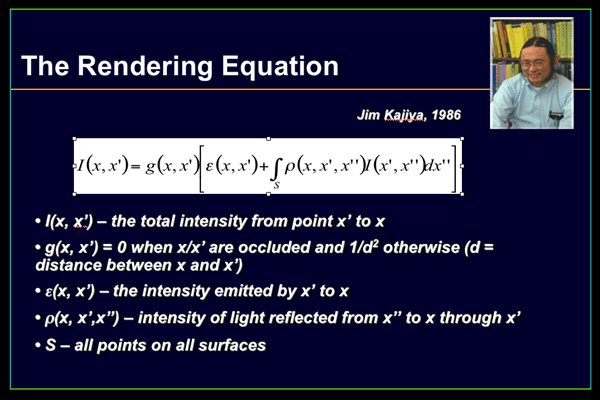

Another important component of complex and realistic images is accurate lighting. Lighting computation is one of the most complicated problems in computer graphics, and it is also one of the most critical for believable images. Lighting is what reveals surface detail in a way that is keyed to an object’s physical properties. Most lighting approximation techniques are based on estimating the amount of light energy that is transmitted, reflected or absorbed at a given point on a surface. Almost every reasonable algorithm is derived from the rendering equation, a very important contribution to the field of computer graphics made by James T. Kajiya of Caltech in 1986. Kajiya based his idea on the theory of radiative heat transfer.
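For reference, Kajiya’s paper writes the rendering equation in the following form. Here I is the transport intensity, g the geometry (occlusion) term, ε the emittance, ρ the scattering term, and S the set of all surface points in the scene:

$$
I(x, x') = g(x, x')\left[\varepsilon(x, x') + \int_{S} \rho(x, x', x'')\, I(x', x'')\, dx''\right]
$$

Because I appears on both sides, the equation is recursive: the light leaving x' toward x depends on the light arriving at x' from every other surface point x''.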

The term I(x, x’) is the intensity of light that travels from point x’ to point x, assuming there are no surfaces between the two points to deflect or scatter the light.

I(x, x’) is the energy of radiation per unit time per unit area of source dx’ per unit area dx of the target. In many cases, computer graphics researchers do not deal with joules of energy when talking about the intensity of light; more descriptive terms are used instead. White, for example, is considered a hot (or high-intensity) color, while deep blues, purples and very dark shades of grey are cool (or low-intensity) colors. Once all calculations are done, the numerical value of I(x, x’) is usually normalized to the range [0.0, 1.0].

The quantity g(x, x’), called the geometry term, accounts for occlusion between point x’ and point x. Its value is exactly zero if there is no straight line of sight between x’ and x. From a geometric standpoint this makes perfect sense: if the geometry of the scene is such that no light can travel between two points, then whatever illumination x’ provides cannot be absorbed or reflected at x. If the two points are mutually visible, however, g(x, x’) equals 1/r², where r is the distance from x’ to x (the inverse-square law).
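A minimal sketch of the geometry term, assuming a hypothetical scene whose only occluders are spheres given as (center, radius) pairs. The illustrative function geometry_term returns zero when any sphere blocks the straight segment from x’ to x, and 1/r² otherwise.

```python
import math

def geometry_term(x, xp, occluders):
    """Sketch of g(x, x'): 0 if an occluder blocks the segment x' -> x, else 1/r^2.

    Points are (x, y, z) tuples; occluders are hypothetical (center, radius) spheres.
    """
    d = [b - a for a, b in zip(xp, x)]           # vector from x' to x
    r2 = sum(c * c for c in d)
    r = math.sqrt(r2)
    u = [c / r for c in d]                       # unit direction from x' to x
    for center, radius in occluders:
        # Solve |x' + t*u - center|^2 = radius^2 for t, then keep only 0 < t < r
        oc = [a - c for a, c in zip(xp, center)]
        b = sum(p * q for p, q in zip(oc, u))
        c = sum(p * p for p in oc) - radius * radius
        disc = b * b - c
        if disc < 0:
            continue                             # this sphere is nowhere near the line
        sq = math.sqrt(disc)
        for t in (-b - sq, -b + sq):
            if 1e-9 < t < r - 1e-9:
                return 0.0                       # line of sight is blocked
    return 1.0 / r2                              # mutually visible: inverse-square falloff
```

For example, two points 2 units apart with nothing between them give g = 0.25, while a sphere of radius 0.5 placed midway between them drives g to 0.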

The emittance term ε(x, x’) measures the energy emitted by a surface at point x’ that reaches point x, per unit time per unit area of source per unit area of target. These are the same units as the transport intensity I. The difference, however, is that emittance is also a function of the distance between x’ and x.

Surfaces are often illuminated indirectly: some point x receives light scattered from point x’ that originated at point x’’. The scattering term ρ(x, x’, x’’), which describes how much of the light arriving at x’ from x’’ is scattered toward x, is a dimensionless quantity.

Because I is defined recursively (the light arriving at x depends on the light arriving at x’ from every other point x’’), evaluating the integrated intensity I for each point on a surface is a very expensive task. In the paper that introduced the rendering equation, Kajiya also introduced a Monte Carlo method for approximating it. Other good approximations have since been introduced and are widely used, but Kajiya’s theory has influenced the derivation of most alternative approaches. Much simpler equations for I(x, x’) are also typically substituted in indoor lighting models.
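The idea behind Monte Carlo approximation, estimating an integral by averaging randomly sampled values of the integrand, can be shown on a much smaller problem than the full rendering equation. This sketch is a generic Monte Carlo estimator, not Kajiya’s algorithm: it estimates the light arriving at a point under a uniformly bright sky of radiance L, the hemispherical integral of L cos θ, whose exact value is πL. The function name and parameters are illustrative.

```python
import math
import random

def estimate_irradiance(radiance, n_samples, seed=0):
    """Monte Carlo estimate of the integral of L*cos(theta) over the hemisphere.

    Directions are drawn uniformly over the hemisphere (pdf = 1 / (2*pi)), and
    each sample contributes integrand / pdf; the average converges to pi * L.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(n_samples):
        # Under uniform hemisphere sampling, cos(theta) is uniform on [0, 1);
        # the azimuth is not needed because this integrand does not depend on it.
        cos_theta = rng.random()
        total += radiance * cos_theta / pdf
    return total / n_samples
```

Calling estimate_irradiance(1.0, 100_000) returns a value close to π; the error shrinks roughly as one over the square root of the sample count, which is why Monte Carlo rendering needs many samples per pixel to suppress noise.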

Global illumination refers to a class of algorithms used in 3D computer graphics that, when determining the light falling on a surface, take into account not only light that has traveled directly from a light source (local illumination) but also light that has been reflected from other surfaces in the scene. This is the situation in most physical scenes that a graphics artist would want to simulate.

Images rendered using global illumination algorithms are often considered more photorealistic than images rendered using local illumination algorithms. However, they are also much slower and more computationally expensive to create. A common approach is to compute the global illumination of a scene once and store that information along with the geometry. The stored data can then be used to generate images from different viewpoints within the scene, as long as no lights have been added or deleted. Radiosity, ray tracing, cone tracing and photon mapping are examples of global illumination algorithms.

Radiosity was introduced in 1984 by researchers at Cornell University (Goral et al.) in their paper Modeling the Interaction of Light Between Diffuse Surfaces. Like Kajiya’s rendering equation, the radiosity method is grounded in the theory of thermal radiation, since it computes the amount of light energy transferred between two surfaces. To simplify the computation, the radiosity algorithm assumes that this amount is constant across each surface (perfect, or ideal, Lambertian surfaces). As a result, to compute an accurate image, the geometry in the scene description must be broken down into smaller areas, or patches, which are then recombined for the final image.

The amount of light energy transfer can be computed by using the known reflectivity of the reflecting patch and the emission quantity of an “illuminating” patch, combined with what is called the form factor of the two patches. This dimensionless quantity is computed from the geometric orientation of the two patches, and can be thought of as the fraction of the total possible emitting area of the first patch which is covered by the second patch.
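The paragraph above describes the transfer in words. In the standard discrete radiosity formulation, which the text does not spell out, each patch i satisfies B_i = E_i + ρ_i Σ_j F_ij B_j, where B is radiosity, E emission, ρ reflectivity and F_ij the form factor from patch i to patch j. A minimal sketch that solves this system by simple Jacobi iteration, assuming the form factors are already known; the function name and the two-patch numbers are illustrative.

```python
def solve_radiosity(emission, reflectivity, form_factors, iterations=100):
    """Jacobi iteration for B_i = E_i + rho_i * sum_j F_ij * B_j.

    form_factors[i][j] is the fraction of energy leaving patch i that reaches patch j.
    Converges when every reflectivity is below 1 and each row of F sums to at most 1.
    """
    n = len(emission)
    b = list(emission)                       # first guess: emitted light only
    for _ in range(iterations):
        # Each pass adds one more bounce of inter-reflected light
        b = [
            emission[i] + reflectivity[i] * sum(form_factors[i][j] * b[j] for j in range(n))
            for i in range(n)
        ]
    return b
```

For two facing patches with form factors [[0, 0.5], [0.5, 0]], where only the first emits (E = [1, 0]) and both have reflectivity 0.5, the iteration converges to B ≈ [1.0667, 0.2667]: the dark patch picks up reflected light, and some of it returns to the emitter.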

The form factor can be calculated in a number of ways. Early methods approximated it with a hemicube, an imaginary half-cube centered on the first surface onto which the second surface is projected, devised by Cohen and Greenberg in 1985. The hemicube also solved the intervening-patch problem, in which a third patch blocks part of the view between the two. Computing form factors is quite expensive, however, because ideally they must be derived for every possible pair of patches, so the computation grows quadratically as geometry is added.
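The quadratic cost shows up directly in code. This sketch does not implement the hemicube; it uses the simpler differential-area approximation F_ij ≈ cos θ_i cos θ_j A_j / (π r²), which assumes patches are small relative to their separation and ignores intervening patches. Patch centers, unit normals and areas are passed as plain lists; the function name is illustrative.

```python
import math

def form_factor_matrix(centers, normals, areas):
    """Approximate F_ij ~ cos(theta_i) * cos(theta_j) * A_j / (pi * r^2) for every pair.

    Ignores occlusion; normals must be unit vectors. The nested loops make one
    evaluation per ordered pair of patches, hence O(n^2) work.
    """
    n = len(centers)
    f = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue                                 # a flat patch cannot see itself
            d = [cj - ci for ci, cj in zip(centers[i], centers[j])]
            r2 = sum(c * c for c in d)
            r = math.sqrt(r2)
            cos_i = sum(a * b for a, b in zip(normals[i], d)) / r
            cos_j = -sum(a * b for a, b in zip(normals[j], d)) / r
            if cos_i > 0 and cos_j > 0:                  # patches must face each other
                f[i][j] = cos_i * cos_j * areas[j] / (math.pi * r2)
    return f
```

Doubling the number of patches quadruples the number of pairs evaluated, which is the growth the paragraph above describes.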

Ray tracing is one of the most popular methods used in 3D computer graphics to render an image. It works by tracing the path taken by a ray of light through the scene, and calculating reflection, refraction, or absorption of the ray whenever it intersects an object (or the background) in the scene.
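The core operation in a ray tracer is finding where a ray first meets an object. A minimal sketch for a sphere, the classic test case, assuming points and directions are (x, y, z) tuples; it substitutes the ray origin + t·direction into the sphere equation and solves the resulting quadratic for t. The function name is illustrative.

```python
import math

def intersect_sphere(origin, direction, center, radius):
    """Return the nearest positive distance t along a ray to a sphere, or None.

    Solves |origin + t*direction - center|^2 = radius^2; direction need not be unit length.
    """
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4 * a * c
    if disc < 0:
        return None                      # ray misses the sphere
    sq = math.sqrt(disc)
    for t in ((-b - sq) / (2 * a), (-b + sq) / (2 * a)):
        if t > 1e-9:                     # ignore hits behind (or at) the origin
            return t
    return None
```

A ray from the origin along +z hits a unit sphere centered at (0, 0, 5) at t = 4, its near surface.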

For example, starting at a light source, trace a ray of light to a surface, which is transparent but refracts the light beam in a different direction while absorbing some of the spectrum (and altering the color). From this point, the beam is traced until it strikes another surface, which is not transparent. In this case the light undergoes both absorption (further changing the color) and reflection (changing the direction). Finally, from this second surface it is traced directly into the virtual camera, where its resulting color contributes to the final rendered image.
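The change of direction at the transparent surface in this example follows Snell’s law. A minimal sketch of its common vector form, assuming unit-length vectors, a normal that points back toward the incoming ray, and refractive indices n1 (outside) and n2 (inside); the function name is illustrative, and absorption is not modeled here.

```python
import math

def refract(incident, normal, n1, n2):
    """Bend a unit direction crossing from index n1 into index n2 (Snell's law).

    `normal` is the unit surface normal pointing back toward the incoming ray.
    Returns None on total internal reflection.
    """
    eta = n1 / n2
    cos_i = -sum(i * n for i, n in zip(incident, normal))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)   # squared sine of the refracted angle
    if sin2_t > 1.0:
        return None                              # total internal reflection
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * i + (eta * cos_i - cos_t) * n for i, n in zip(incident, normal))
```

A ray entering glass (n2 = 1.5) at 45° leaves with sin θ_t = sin 45° / 1.5, bent toward the normal; going from glass into air at 60°, the function returns None because the light is totally internally reflected.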

Ray tracing’s popularity stems from its realism compared with other rendering methods; effects such as reflections and shadows, which are difficult to simulate with other algorithms, follow naturally from the ray tracing algorithm. The main drawback of ray tracing is that it can be an extremely slow process, due mainly to the large number of light rays that must be traced and the even larger number of potentially complicated intersection calculations between rays and geometry (which may in turn create new rays). Since very few of the rays emitted from light sources end up reaching the camera, a common optimization is to trace hypothetical rays of light in the opposite direction: a ray is traced from the camera into the scene, and back through its interactions with geometry, to see whether it ends up at a light source. This is usually referred to as backwards ray tracing.
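A minimal sketch of backwards ray tracing for one pixel, assuming a hypothetical scene of spheres, a single point light, and a pinhole camera at the origin looking down −z. The ray starts at the camera, finds the nearest surface, and then casts a shadow ray toward the light; the hit is lit (with simple Lambertian shading) only if nothing blocks that shadow ray. It deliberately omits the reflected and refracted rays a full Whitted-style tracer would spawn, and all names are illustrative.

```python
import math

def hit_sphere(origin, direction, center, radius):
    """Nearest t > eps where a unit-direction ray meets a sphere, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    sq = math.sqrt(disc)
    for t in (-b - sq, -b + sq):
        if t > 1e-6:                     # epsilon avoids re-hitting the surface we start on
            return t
    return None

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]

def trace_from_camera(px, py, width, height, spheres, light):
    """Shade pixel (px, py) by tracing from the camera back toward the light."""
    # Map the pixel center onto an image plane at z = -1 spanning [-1, 1] x [-1, 1]
    x = 2.0 * (px + 0.5) / width - 1.0
    y = 1.0 - 2.0 * (py + 0.5) / height
    ray = normalize([x, y, -1.0])
    nearest = None
    for center, radius in spheres:
        t = hit_sphere([0.0, 0.0, 0.0], ray, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center, radius)
    if nearest is None:
        return 0.0                                   # ray escaped: background
    t, center, radius = nearest
    point = [t * d for d in ray]
    normal = normalize([p - c for p, c in zip(point, center)])
    to_light = [l - p for l, p in zip(light, point)]
    dist = math.sqrt(sum(c * c for c in to_light))
    to_light = [c / dist for c in to_light]
    # Shadow ray: does any sphere sit between the hit point and the light?
    for c_other, r_other in spheres:
        t_block = hit_sphere(point, to_light, c_other, r_other)
        if t_block is not None and t_block < dist:
            return 0.0
    return max(0.0, sum(n * l for n, l in zip(normal, to_light)))   # Lambert term
```

Looping trace_from_camera over every (px, py) yields a grayscale image; only rays that start at the camera are ever traced, which is the saving that backwards ray tracing provides.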

Nonetheless, since its first use as a graphics technique by Turner Whitted in 1980, much research has been done on acceleration schemes for ray tracing; many of these focus on speeding up the determination of whether a light ray has intersected an arbitrary piece of geometry in the scene, often by storing the geometric database in a spatially organized data structure. Ray tracing has also shown itself to be very versatile, and in the last decade ray tracing has been extended to global illumination rendering methods such as photon mapping and Metropolis light transport.
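Most spatial acceleration structures, such as bounding volume hierarchies, rest on a cheap test of whether a ray hits an axis-aligned bounding box; if it misses the box, every object inside can be skipped. A minimal sketch of the standard slab test, assuming (x, y, z) tuples for the ray and the box corners; the function name is illustrative.

```python
def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: does a ray (t >= 0) hit an axis-aligned bounding box?

    The ray overlaps each pair of parallel planes ("slab") over an interval of t;
    it hits the box only if the three intervals share a common range.
    """
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            if o < lo or o > hi:
                return False            # parallel to this slab and outside it
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False                # the slab intervals do not overlap
    return True
```

In a hierarchy, the tracer runs this test on a node’s box first and only descends into its children, and finally the actual geometry, when it returns True.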

Photon mapping is a ray tracing technique used to realistically simulate the interaction of light with different objects. It was pioneered by Henrik Wann Jensen. Specifically, it is capable of simulating the refraction of light through a transparent substance, such as glass or water, diffuse inter-reflections between illuminated objects, and some of the effects caused by particulate matter such as smoke or water vapor.

In the context of the refraction of light through a transparent medium, the desired effects are called caustics. A caustic is a pattern of light that is focused on a surface after having had the original path of light rays bent by an intermediate surface.

With photon mapping (most often used in conjunction with ray tracing) light packets (photons) are sent out into the scene from the light source (reverse ray tracing) and whenever they intersect with a surface, the 3D coordinate of the intersection is stored in a cache (also called the photon map) along with the incoming direction and the energy of the photon. As each photon is bounced or refracted by intermediate surfaces, the energy gets absorbed until no more is left. We can then stop tracing the path of the photon. Often we stop tracing the path after a pre-defined number of bounces, in order to save time.
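A minimal sketch of the photon-tracing pass, assuming a deliberately tiny hypothetical scene: a point light between an infinite floor (y = 0) and ceiling (y = height), both with the same reflectance. Each hit is cached as (position, incoming direction, power); the photon keeps only a fraction of its power per bounce and is dropped once that power falls below a threshold or after max_bounces hits. Bounce directions are drawn uniformly over the hemisphere facing back into the room, a simplification of proper diffuse (cosine-weighted) scattering. All names and defaults are illustrative.

```python
import math
import random

def random_unit_vector(rng):
    """Uniformly distributed direction on the unit sphere."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    s = math.sqrt(1.0 - z * z)
    return (s * math.cos(phi), s * math.sin(phi), z)

def trace_photons(light_pos, light_power, n_photons, reflectance=0.5,
                  max_bounces=4, min_power=1e-4, height=2.0, seed=0):
    """Shoot photons between a floor (y = 0) and a ceiling (y = height)."""
    rng = random.Random(seed)
    photon_map = []
    for _ in range(n_photons):
        pos = light_pos
        direction = random_unit_vector(rng)
        power = light_power / n_photons            # each photon carries an equal share
        for _ in range(max_bounces):               # stop after a fixed number of bounces
            if direction[1] == 0.0:
                break                              # parallel to both planes: never lands
            target_y = 0.0 if direction[1] < 0 else height
            t = (target_y - pos[1]) / direction[1]
            pos = tuple(p + t * d for p, d in zip(pos, direction))
            photon_map.append((pos, direction, power))   # cache the hit
            power *= reflectance                   # the rest of the energy is absorbed
            if power < min_power:
                break                              # too little energy left to matter
            # Bounce: pick a random direction on the side facing back into the room
            direction = random_unit_vector(rng)
            if (direction[1] > 0) != (target_y == 0.0):
                direction = (direction[0], -direction[1], direction[2])
    return photon_map
```

Calling trace_photons((0.0, 1.0, 0.0), 1.0, 200) returns up to 800 cached hits, each lying on the floor or the ceiling, with the stored power halving at every bounce.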

Also to save time, the direction of the outgoing rays is often constrained. Instead of simply sending out photons in random directions (a waste of time), we send them in the direction of a known object that we wish to use as a photon-manipulator to either focus or diffuse the light.
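One way to aim photons at a known object, sketched under the assumption of a point light outside a spherical target (all names hypothetical): sample directions uniformly inside the cone that just encloses the sphere, and report the fraction of the full sphere of directions that this cone covers. If each of n photons sent this way is given light_power × fraction / n, the total energy stays consistent with an isotropic light.

```python
import math
import random

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def sample_toward_sphere(light_pos, center, radius, rng):
    """Return (direction, fraction): a direction uniform inside the cone from
    light_pos that encloses the target sphere, and the cone's share of 4*pi."""
    axis = [c - p for c, p in zip(center, light_pos)]
    dist = math.sqrt(sum(c * c for c in axis))
    w = tuple(c / dist for c in axis)
    cos_max = math.sqrt(max(0.0, 1.0 - (radius / dist) ** 2))
    # Uniform sampling of the cone: cos(theta) is uniform on [cos_max, 1]
    cos_t = 1.0 - rng.random() * (1.0 - cos_max)
    sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
    phi = 2.0 * math.pi * rng.random()
    # Orthonormal basis (u, v, w) around the cone axis w
    helper = (1.0, 0.0, 0.0) if abs(w[0]) < 0.9 else (0.0, 1.0, 0.0)
    u = _normalize(_cross(helper, w))
    v = _cross(w, u)
    direction = tuple(sin_t * math.cos(phi) * ui + sin_t * math.sin(phi) * vi + cos_t * wi
                      for ui, vi, wi in zip(u, v, w))
    fraction = (1.0 - cos_max) / 2.0     # cone solid angle 2*pi*(1 - cos_max) over 4*pi
    return direction, fraction
```

For a unit sphere 10 units from the light, every sampled direction stays within about 5.7° of the axis, so no photon is wasted on empty space.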

This photon tracing is generally a preprocess, carried out before the main rendering of the image, and the photon map is often stored for later use. Once the actual rendering starts, every point where a ray intersects an object is tested to see whether it lies within a certain range of one or more stored photons; if so, the energy of those photons is added to the energy calculated using a more conventional lighting equation.
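A minimal sketch of this lookup step, assuming photon_map is a plain list of (position, incoming_direction, power) tuples and using a brute-force fixed-radius search. Jensen’s method stores photons in a kd-tree and gathers the k nearest photons instead; this version only shows the density estimate itself, the power of nearby photons divided by the area πr² of the search disc. The helper shade is hypothetical: it adds the photon estimate, for a diffuse surface of the given albedo, to a direct-lighting value computed some other way.

```python
import math

def photon_density(point, photon_map, radius):
    """Flux per unit area near `point`, estimated from the stored photons.

    Every photon within `radius` is treated as landing on a flat disc of
    area pi * radius^2 centered on the query point.
    """
    r2 = radius * radius
    total = 0.0
    for position, _direction, power in photon_map:
        if sum((p - q) ** 2 for p, q in zip(position, point)) <= r2:
            total += power
    return total / (math.pi * r2)

def shade(point, direct_light, photon_map, radius, albedo):
    """Add the photon estimate (diffuse reflection: albedo / pi * irradiance)
    to a direct-lighting value from a conventional local model."""
    return direct_light + albedo / math.pi * photon_density(point, photon_map, radius)
```

The search radius trades noise for blur: a larger radius averages more photons but smears sharp features such as caustic edges.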

There are many refinements that can be made to the algorithm, like deciding where to send the photons, how many to send and in what pattern. This method can result in extremely realistic images if implemented correctly.

Paul Debevec earned degrees in Math and Computer Engineering at the University of Michigan in 1992 and a Ph.D. in Computer Science at UC Berkeley in 1996 [1]. He began working in image-based rendering in 1991 by deriving a textured 3D model of a Chevette from photographs for an animation project. At Interval Research Corporation he contributed to Michael Naimark’s Immersion ’94 virtual exploration of the Banff National forest and collaborated with Golan Levin on Rouen Revisited, an interactive visualization of the Rouen Cathedral and Monet’s related series of paintings. Debevec’s Ph.D. thesis presented an interactive method for modeling architectural scenes from photographs and rendering these scenes using projective texture-mapping. With this he led the creation of a photorealistic model of the Berkeley campus for his 1997 film The Campanile Movie whose techniques were later used to create the virtual backgrounds for the “bullet time” shots in the 1999 Keanu Reeves film The Matrix.

Since his Ph.D. Debevec has worked on techniques for capturing real-world illumination and illuminating synthetic objects with real light, facilitating the realistic integration of real and computer generated imagery. His 1999 film Fiat Lux placed towering monoliths and gleaming spheres into a photorealistic reconstruction of St. Peter’s Basilica, all illuminated by the light that was actually there. For real objects, Debevec led the development of the Light Stage, a device that allows objects and actors to be synthetically illuminated with any form of lighting. In May 2000 Debevec became the Executive Producer of Graphics Research at USC’s Institute for Creative Technologies, where he directs research in virtual actors, virtual environments, and applying computer graphics to creative projects.

In 2001 Paul Debevec received ACM SIGGRAPH’s first Significant New Researcher Award for his Creative and Innovative Work in the Field of Image-Based Modeling and Rendering, and in 2002 was named one of the world’s top 100 young innovators by MIT’s Technology Review Magazine.

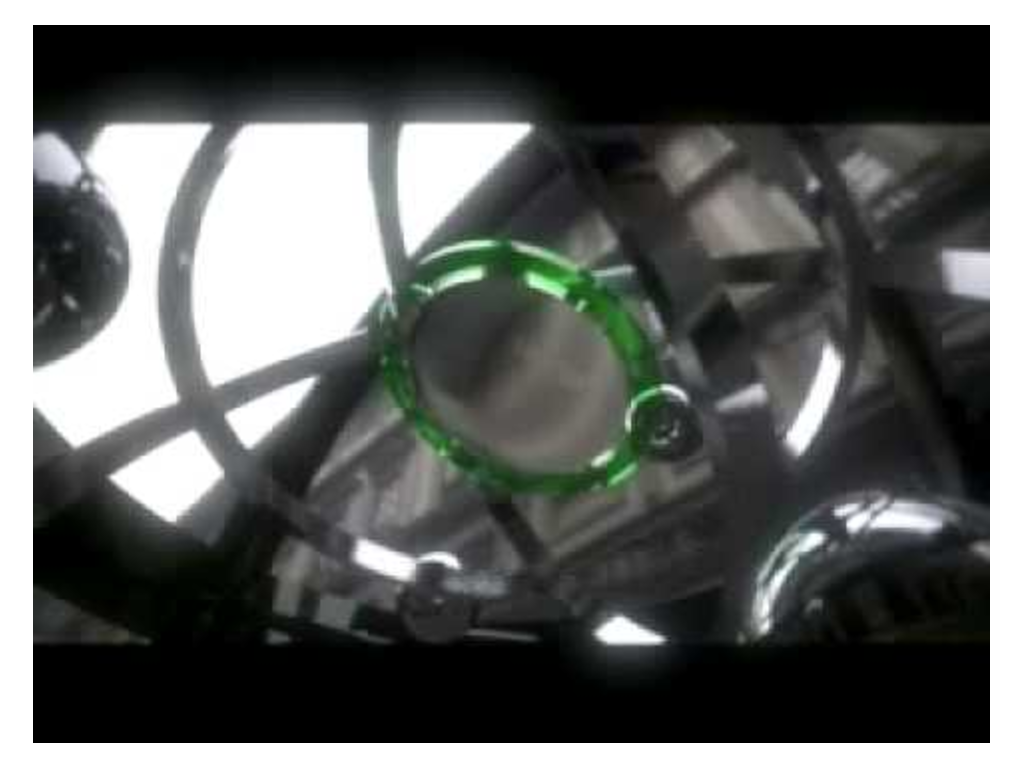

Movie 19.13 Fiat Lux – The Movie

http://www.pauldebevec.com/FiatLux/movie/

Produced in 1999 by Paul Debevec showing elaborate lighting techniques

Kajiya, James T. The Rendering Equation. Computer Graphics, 20(4):143-150, August 1986.

Goral, Cindy, Ken Torrance, Don Greenberg and B. Battaile. Modeling the Interaction of Light Between Diffuse Surfaces. Computer Graphics, 18(3), July 1984.

Paul Debevec is the consummate researcher and is very prolific in publishing and filmmaking. An extended list of his research, projects and films is at

http://www.pauldebevec.com

His publication list can be found at

http://ict.debevec.org/~debevec/Publications/

Movie 19.14 The Campanile Movie

http://www.youtube.com/watch?v=RPhGEiM_6lM

Movie 19.15 Rendering with Natural Light (Radeon implementation)

http://www.youtube.com/watch?v=fW_GPCR9_GU

Animation World Network published an article titled Paul Debevec’s Journey to the Parthenon in 2004, the year his movie of the Parthenon was shown at the SIGGRAPH Film Festival.

Movie 19.16 Simulation of the Parthenon Lighting

https://www.youtube.com/watch?v=G1D5hbxa8CM&list=PL8EhRiZ0XTxdJlf4sIi_3lIpe8UtqfdXk&index=6&t=0s

Movie 19.17 Presentation by Paul Debevec

Achieving Photo-Real Digital Actors

Palo Alto Film Festival

http://www.youtube.com/watch?v=VKx_DZp_0EI

[1] From Paul Debevec's bio at http://www.debevec.org/