Chapter 15: Graphics Hardware Advancements

15.3 Graphics Accelerators

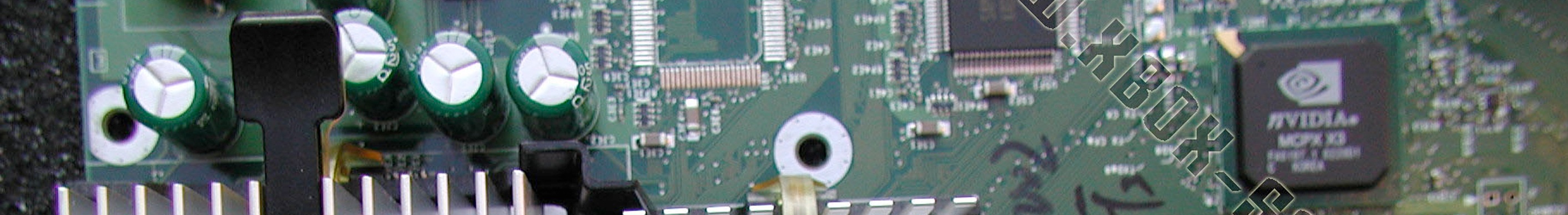

Graphics accelerator boards being developed at this time included hardware acceleration for many image synthesis operations: hardware pan and zoom, antialiasing, alpha channels for compositing, scan conversion, and more. The concept of adding a coprocessor to take graphics operations away from the CPU was instrumental in bringing complex graphics images to the masses. The early frame buffers and the later accelerator boards associated with them are now embodied in the graphics cards in today’s computers, such as those manufactured by NVIDIA, 3Dlabs and ATI.

HowStuffWorks.com has a reasonable explanation of the emerging role of these graphics coprocessors:

How Graphics Boards Help

Since the early days of personal computers, most graphics boards have been translators, taking the fully developed image created by the computer’s CPU and translating it into the electrical impulses required to drive the computer’s monitor. This approach works, but all of the processing for the image is done by the CPU — along with all the processing for the sound, player input (for games) and the interrupts for the system. Because of everything the computer must do to make modern 3-D games and multi-media presentations happen, it’s easy for even the fastest modern processors to become overworked and unable to serve the various requirements of the software in real time. It’s here that the graphics co-processor helps: it splits the work with the CPU so that the total multi-media experience can move at an acceptable speed.

As we’ve seen, the first step in building a 3-D digital image is creating a wireframe world of triangles and polygons. The wireframe world is then transformed from the three-dimensional mathematical world into a set of patterns that will display on a 2-D screen. The transformed image is then covered with surfaces, or rendered, lit from one or more light sources, and finally translated into the patterns that display on a monitor’s screen. The most common graphics co-processors in the current generation of graphics display boards, however, take over the task of rendering from the CPU only after the wireframe has been created and transformed into a 2-D set of polygons. The graphics co-processor found in boards like the Voodoo3 and TNT2 Ultra takes over from the CPU at this stage. This is an important step, but graphics processors on the cutting edge of technology are designed to relieve the CPU at even earlier points in the process.
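The step of transforming the 3-D wireframe into a 2-D set of polygons can be illustrated with a simple perspective projection. The following is a minimal sketch, assuming a pinhole camera at the origin looking down the negative z axis, a focal length of 1 and a 640 × 480 screen; these are illustrative assumptions, not how any particular board or API defines its camera.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]

def project_vertex(v: Vec3, focal: float, width: int, height: int) -> Vec2:
    """Perspective-project one camera-space point to pixel coordinates.

    Assumed convention (illustrative only): camera at the origin looking down -z.
    """
    x, y, z = v
    if z >= 0:
        raise ValueError("vertex must be in front of the camera (z < 0)")
    # Perspective divide: points farther away (larger |z|) move toward the center.
    ndc_x = focal * x / -z
    ndc_y = focal * y / -z
    # Map the range [-1, 1] onto the screen, with pixel y growing downward.
    px = (ndc_x + 1.0) * 0.5 * width
    py = (1.0 - ndc_y) * 0.5 * height
    return (px, py)

def project_triangle(tri: List[Vec3], focal: float = 1.0,
                     width: int = 640, height: int = 480) -> List[Vec2]:
    """Project each vertex of a 3-D triangle, yielding a 2-D screen triangle."""
    return [project_vertex(v, focal, width, height) for v in tri]

if __name__ == "__main__":
    triangle = [(0.0, 0.0, -2.0), (1.0, 0.0, -2.0), (0.0, 1.0, -4.0)]
    print(project_triangle(triangle))
```

In the passage’s terms, boards such as the Voodoo3 received triangles that had already been through a step like this on the CPU and handled only the rendering that followed.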

NVIDIA’s GeForce 256, which NVIDIA billed as the first graphics processing unit, or GPU, took one approach to relieving the CPU of more responsibility. In addition to the rendering done by earlier-generation boards, the GeForce 256 added the transformation of wireframe models from 3-D space to 2-D display space, as well as the work needed to compute lighting. Since both transformations and rendering involve significant floating point mathematics (called “floating point” because the decimal point can move as needed to provide high precision), these tasks place a heavy processing burden on the CPU. Because the graphics processor doesn’t have to cope with many of the tasks expected of the CPU, it can be designed to do those mathematical tasks very quickly.
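The lighting work that hardware transform-and-lighting took over consists of many small floating-point calculations per vertex. A minimal sketch, assuming a simple diffuse-only (Lambertian) model with one directional light; this is an illustrative choice, not the GeForce 256’s actual lighting pipeline.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length (a square root plus three divisions)."""
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / length, v[1] / length, v[2] / length)

def dot(a: Vec3, b: Vec3) -> float:
    """Dot product: three multiplications and two additions."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def lambert_diffuse(normal: Vec3, to_light: Vec3,
                    light_intensity: float, albedo: float) -> float:
    """Diffuse brightness at a vertex: albedo * intensity * max(0, N . L).

    Surfaces facing away from the light (N . L < 0) receive no diffuse light.
    """
    n = normalize(normal)
    l = normalize(to_light)
    return albedo * light_intensity * max(0.0, dot(n, l))

if __name__ == "__main__":
    # A vertex whose normal points along +z, lit head-on, at 45 degrees, and from behind.
    normal = (0.0, 0.0, 1.0)
    for light_dir in [(0.0, 0.0, 1.0), (0.0, 1.0, 1.0), (0.0, 0.0, -1.0)]:
        print(light_dir, round(lambert_diffuse(normal, light_dir, 1.0, 0.8), 4))
```

Repeating this arithmetic for every vertex, every light and every frame is the kind of floating-point load the passage describes moving off the CPU.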

3dfx introduced its first 3D accelerator, the Voodoo, in 1996. Its later Voodoo5 took over another set of tasks from the CPU, using a technology 3dfx called the T-buffer. This technology focused on improving the rendering process rather than adding new tasks to the processor. The T-buffer was designed to improve anti-aliasing by rendering up to four copies of the same image, each slightly offset from the others, and then combining them to slightly blur the edges of objects and minimize the “jaggies” that can plague computer-generated images. The same technique was used to generate motion blur, blurred shadows and depth-of-field focus blurring. All of these produced the smoother-looking, more realistic images that animators and graphic designers wanted. The goal of the Voodoo5 design was to deliver full-screen anti-aliasing while still maintaining fast frame rates.
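The idea of rendering several slightly offset copies of an image and averaging them can be sketched in software. This is a rough analogy under stated assumptions, not 3dfx’s hardware design: the four sub-pixel offsets in `OFFSETS` are hypothetical, and each “copy” is just a coverage test of one triangle at one sample point per pixel.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Image = List[List[float]]

# Hypothetical sub-pixel offsets for the four jittered copies;
# 3dfx's actual sample positions are not reproduced here.
OFFSETS: List[Point] = [(0.25, 0.25), (0.75, 0.25), (0.25, 0.75), (0.75, 0.75)]

def edge(a: Point, b: Point, p: Point) -> float:
    """Edge function: its sign tells which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def inside(tri: List[Point], p: Point) -> bool:
    """True if p is inside the triangle (vertex order chosen so interior edges are >= 0)."""
    a, b, c = tri
    return edge(a, b, p) >= 0 and edge(b, c, p) >= 0 and edge(c, a, p) >= 0

def render_copy(tri: List[Point], width: int, height: int, offset: Point) -> Image:
    """Render one copy: a pixel is 1.0 if its offset sample point falls inside the triangle."""
    ox, oy = offset
    return [[1.0 if inside(tri, (x + ox, y + oy)) else 0.0 for x in range(width)]
            for y in range(height)]

def antialias_by_averaging(tri: List[Point], width: int, height: int) -> Image:
    """Render one copy per offset and average them, so edge pixels get partial coverage."""
    copies = [render_copy(tri, width, height, o) for o in OFFSETS]
    return [[sum(img[y][x] for img in copies) / len(copies) for x in range(width)]
            for y in range(height)]

if __name__ == "__main__":
    triangle = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
    for row in antialias_by_averaging(triangle, 4, 4):
        print(row)
```

For this triangle, pixels straddling the diagonal edge come out at 0.75 rather than a hard 0 or 1, which is the softened edge the passage describes. A single sample at each pixel center would instead produce a stair-stepped edge of only 0s and 1s.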

The technical definition of a GPU is “a single chip processor with integrated transform, lighting, triangle setup/clipping, and rendering engines that are capable of processing a minimum of 10 million polygons per second.” (NVIDIA)
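To put the 10-million-polygon threshold in per-frame terms, assume an animation target of 60 frames per second (an illustrative assumption; the definition itself specifies no frame rate):

$$
\frac{10{,}000{,}000\ \text{polygons/s}}{60\ \text{frames/s}} \approx 166{,}667\ \text{polygons per frame}
$$

At 30 frames per second, the same throughput allows roughly 333,333 polygons per frame.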

The proliferation of highly complex graphics processors added a significant amount of work to the image-making process. Programming to take advantage of the hardware required knowledge of the specific commands for each card. Standardization therefore became paramount as these technologies were introduced. One of the most important contributions in this area was the graphics API.

The Application Programming Interface (API) is an older computer science technology that facilitates the exchange of messages or data between two or more software applications. In other words, an API is the virtual interface between two interworking software functions, for example between a word processor and a spreadsheet. The technology expanded beyond simple subroutine calls to include features that support interoperability and system modifiability, in response to the need to share data among multiple applications.

An API is a set of rules for writing function or subroutine calls that access functions in a library. Programs that follow these rules in their calls can communicate with any other program that uses the same API, regardless of that program’s internal details. Graphics APIs, in particular, provided access to the rendering hardware embedded in the graphics card. Early APIs included X (the X Window System), PHIGS, PHIGS+ and SGI’s GL. In 1992, SGI introduced OpenGL, which became the most widely used graphics API in the industry. Other approaches included Microsoft’s Direct3D and vendor-specific APIs, such as Quartz for the Macintosh and the Windows API.
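The core idea of a graphics API, an application written once against a fixed set of calls while each card’s driver translates those calls into its own commands, can be sketched in a few lines. Every name below (`GraphicsAPI`, `VendorACard`, `VendorBCard`, `draw_scene` and the command strings) is hypothetical; this is not OpenGL, Direct3D or any real driver interface.

```python
from abc import ABC, abstractmethod
from typing import List, Tuple

Point = Tuple[float, float]

class GraphicsAPI(ABC):
    """Hypothetical API: the agreed-upon calls an application may make."""

    @abstractmethod
    def draw_triangle(self, vertices: List[Point]) -> None:
        """Draw one 2-D triangle given its three vertices."""

class VendorACard(GraphicsAPI):
    """Hypothetical driver translating API calls into vendor A's command format."""

    def __init__(self) -> None:
        self.commands: List[str] = []

    def draw_triangle(self, vertices: List[Point]) -> None:
        self.commands.append(f"A_TRI {vertices}")

class VendorBCard(GraphicsAPI):
    """Hypothetical driver for a different board with a different command format."""

    def __init__(self) -> None:
        self.commands: List[str] = []

    def draw_triangle(self, vertices: List[Point]) -> None:
        # Vendor B (hypothetically) expects a flat list of coordinates.
        flat = [c for v in vertices for c in v]
        self.commands.append(f"B_POLY 3 {flat}")

def draw_scene(api: GraphicsAPI) -> None:
    """Application code: written once against the API, unaware of which card runs it."""
    api.draw_triangle([(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)])

if __name__ == "__main__":
    for card in (VendorACard(), VendorBCard()):
        draw_scene(card)
        print(type(card).__name__, card.commands)
```

The same `draw_scene` function drives both boards; only the driver classes know each card’s specific commands, which is the burden the passage describes standard APIs lifting from programmers.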

Movie 15.2 Dawn

https://www.youtube.com/watch?v=4D2meIv08rQ

This demo was released in 2002 to showcase NVIDIA’s GeForce FX series of graphics accelerator cards.